Global Platform Integrity and Community Growth

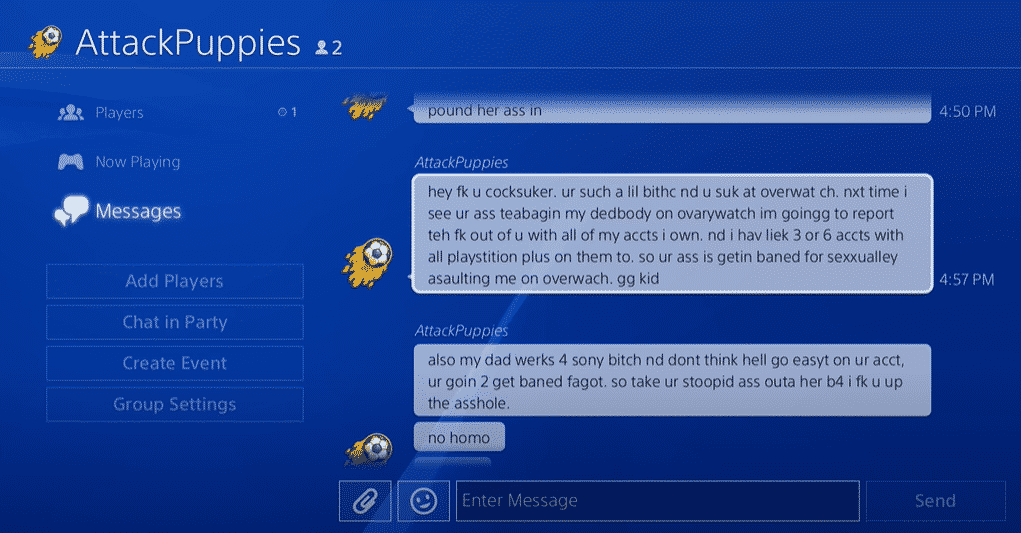

We combine human expertise with advanced enforcement tools and real-time moderation so that every user interaction, content post, and live stream takes place in a secure, trusted, and thriving environment.