Due to the social distancing requirements of COVID-19, online platforms have become vital to people’s work, education, social lives, and leisure.

The content moderators of these platforms are often the unsung heroes of the pandemic, keeping us safe, one click at a time, from those who would do us harm.

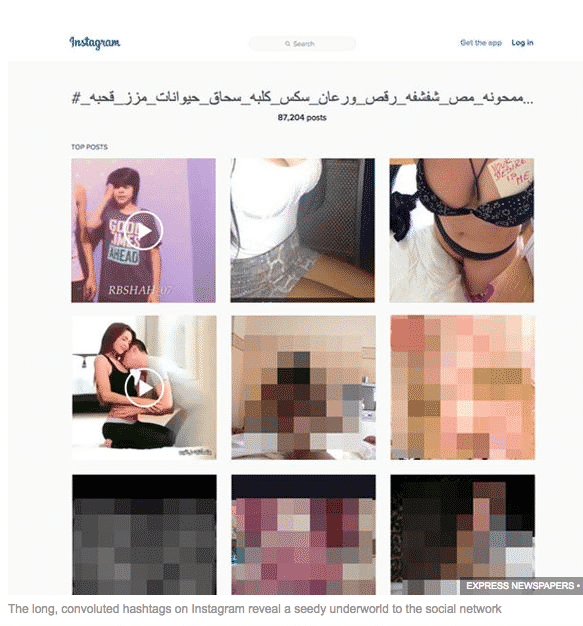

Content moderators protect us across all digital platforms (Source: Tampa Bay Times)

Our online lives are no exception to the risks of this age of disinformation. Potential online harms such as identity fraud, phishing, child exploitation, and online predators pose a serious threat to the mental health of users of online platforms. Hence, moderating the content of online platforms has become crucial as we, and our loved ones, spend more and more time connected through digital spaces. Content moderators block posts that contain inappropriate content such as hate speech, bullying, harassment, and spam. Essentially, what a content moderator does is encourage positive conversations about a brand or its online platforms by enforcing a set of community guidelines. What follows is a brief overview of what a content moderator is and how to become one, as we uncover the particular set of skills and traits needed for this demanding yet indispensable job.

Table Of Contents

- What Is A Content Moderator?

- What Does A Content Moderator Do? (Job Responsibilities, etc.)

- How To Become A Content Moderator?

- Important Skills Required For Content Moderators

- Common Type Of Contents Reviewed By Content Moderator

- Conclusion About Content Moderators

1. What Is A Content Moderator?

A content moderator takes responsibility for approving content and ensuring that it is placed in the correct category before it goes live on a website or an online platform. The content moderator also evaluates and filters user-submitted content so that it is in line with the content requirements and standards of a particular platform or online community. In a nutshell, the core responsibility of a content moderator is to help online platforms and communities establish their credibility and provide real-time protection for users by removing inappropriate and potentially harmful content before it reaches them.

2. What Does A Content Moderator Do? (Job Responsibilities, etc.)

Content moderators are specifically trained to focus on the crucial parts of uploaded content. Even a tiny piece of information in a listing can tell the moderator what their next step should be. It’s also crucial to stay updated on the latest scams and frauds, as uploads may appear innocent at first but prove more sinister on closer inspection. For example, a user can post unlimited videos on Facebook or YouTube, but that doesn’t mean that the user can upload any content. Users can’t add unwanted subject matter such as adult content, spam, indecent photos, profanity, and illegal content. The teams of content moderators working at Alphabet Inc. (YouTube’s parent company) and Facebook make sure that all user-generated content complies with the company’s content guidelines, both before and after publishing.

Job of a Content Moderator

Without moderation, vulnerable people can be exposed to scams or disturbing content. And let’s face it, we cannot watch over our kids 24/7 and make sure they are protected from anything distressing and harmful. Even sites specifically aimed at children can fall victim to unpleasant content (YouTube Kids could be an example). Content moderators around the globe are on a mission to limit the risk of Internet users reading, watching, or listening to content they may consider upsetting or offensive. This is where a content moderator does their job: blocking harmful content from their business’s website, and from the Internet more broadly, to make it a safe place for all end-users and other relevant stakeholders. Content moderators may also respond to user questions and comments on social media posts, brand blogs, and forums. Their responsibilities include ensuring that content creators and managers place their content items correctly and that those items are free from scams, errors, and any illegal or copyrighted material. By performing these tasks, they uphold ethical standards and maintain the legal compliance of digital businesses and online communities.

3. How To Become A Content Moderator?

Content moderation isn’t just a mechanical task. It’s a demanding role that requires an array of skills. Content moderation involves developing the following skills:

- Analytical skills for determining whether the content complies with guidelines

- Patience and knowledge for reviewing potentially sensitive content while maintaining a high pace and accuracy

- Linguistic or industry-specific knowledge for moderating comments

Content moderators may work for a specific brand/company, or they can work for a content moderation company. The main difference between internal and external content moderation is whether the moderation is done by an organization’s employees or by an outsourcing company like Leap Steam.

Choosing between the two options is a common dilemma, so let us briefly touch on the difference between them.

Content moderators who work in-house for a company learn the nitty-gritty details of that company. They become experts at dealing with the specific type of content that the guidelines allow to be posted on the brand’s communication channels. However, many companies choose not to hire in-house teams but rather outsource their moderation needs to a content moderation provider. Outsourced content moderators can be assigned to different projects, each of which may involve multiple tasks. This lets companies or business owners focus more on their core business objectives and larger-scale operations. In the following part, we will go through a content moderator’s specific skills and traits!

4. Important Skills Required For Content Moderators

A content moderator must figure out what content is allowed and what isn’t, following the standards set by a platform. Essential skills include:

- Analytical skills

- Linguistics knowledge

- Flexibility and adaptability

- Good communication skills

- Creativity

As covered previously, analytical skills are required to identify user behaviour in various online channels, such as forums, blogs or social media pages, and to assess whether the content complies with the guidelines. Content moderators also need to understand the cultures of different societies to make the proper analysis. Some texts and images may be considered offensive in one part of the world but perfectly acceptable in another. For example, in India, some texts and images may be deemed obscene, while in the United States, they may not be. Getting this analysis right also helps companies create a better brand experience for their end-users by ensuring a safer and more effective user interface.

Linguistic expertise is essential on international platforms that host users across the globe. Large companies with multiple branches worldwide often look for multilingual copywriters, account managers, content managers, and moderators who can help write, manage and moderate content in different languages, helping improve their online reputation.

The moderation process is constantly evolving as new guidelines and their implications emerge. Hence, a content moderator needs to be flexible and adapt to the ever-changing requirements of each online channel.

Sometimes, content moderators also interact with end-users, organise monthly online raffles and giveaways, perform surveys, and answer customer questions. Hence, good communication skills and creativity are essential for success.

5. Common Type Of Contents Reviewed By Content Moderator

User-generated content is increasing every single day. Content moderation requires constant vigilance to keep up with new technologies. Today, text, images, videos, and audio are the building blocks of most user-generated content. They’re also the most common types of content that people post online. However, the combinations between these formats continue to grow, with new ones emerging continuously. Think of the possible threats that may arise from platforms such as Facebook, Twitter, Instagram, and LinkedIn. Here are some of the common content threats often reviewed by content moderators:

Scams

Scams are as old as time, and unfortunately, Internet fraud is becoming more common. For many online marketplaces, it is often well hidden or hard to detect. Online shopping scams, dating and romance scams, fake charity scams, and identity theft are just some of the increasingly sophisticated attempts by criminals that content moderators try to eliminate from online platforms. 74% of consumers see security as the essential element of their online experience, so it’s necessary to have the proper protection.

Online Harassment

Online harassment is another complex and growing problem. It remains a common experience despite decades of work to identify unruly behaviour and enforce rules against it. Consequently, many people avoid participating in online conversations for fear of harassment. Each platform has its own way of enforcing its rules, and it’s up to the moderators to vigilantly decide whether content violates these policies. This also applies to online gaming communities, where there is an increasing need for game moderation.

Violence And Abuse

Unfortunately, some people feel the need to produce, upload, and share disturbing content showing violence against, and abuse of, animals, women, children, and other vulnerable people. Content moderators are on the front line in the war against this alarming content.

Extremists

Extremists exploit social media for various reasons, from spreading hateful narratives and propaganda to financing, recruitment, and sharing operational information. The role of content moderation is to decide how best to limit audience exposure to extremist narratives and keep extremist views marginal while being conscious of the right to free expression and the appropriateness of restrictions on speech.

Copyright Infringement

Whether Internet intermediaries, such as internet service providers (ISPs), search engines, or social media platforms, are liable for copyright infringement by their users is a subject of debate and court cases in several countries. As things stand, U.S. and European law generally provides that online intermediaries hosting content that infringes copyright are not liable so long as they do not know about it and take action once the infringing content is brought to their attention. Content moderators must be attentive and spot any potential threats that could lead to litigation from the copyright holder.

As new technologies develop, the types of user-generated content that may require moderation screening are likely to increase. To learn more about the technicalities of content moderation, such as pre-content, post-content and reactive content moderation, see our more comprehensive article on the topic.

6. Conclusion About Content Moderators

Any forward-looking business with an eye on expansion MUST be online. And for a company to be truly online, it must engage across multiple platforms, seeking input and user-generated content (UGC) as it goes. Social media and other digital platforms are groaning under the weight of new content. Consumers and companies alike are uploading new content at staggering rates: 300 hours of video are uploaded to YouTube every minute, and 300 million photos are uploaded to Facebook every day! This rate is expected to increase as people spend more and more time at home under lockdown conditions. Inappropriate, harmful, or racy content, or illegal behaviour, hosted on a company’s platform can be a severe problem for a brand’s reputation. Content moderators must diligently scour the platform, seeking out and eliminating content that can cause harm, not just to the brand but to the customers of that brand too. In short, every business with an online presence needs to consider the potential benefits of outsourcing content moderation. To learn more about outsourcing your content moderation processes and the value that it can bring to your business, contact Leap Steam for a free consultation and trial.

Frequently Asked Questions About Content Moderators

What Are The Tools Used For Content Moderation?

Automated tools are often used in content moderation processes, for example:

- Digital Hash Technology

- Image/Voice Recognition

- Natural Language Processing (NLP)

These tools help content moderators identify offensive content posted by bots or users in places such as blog posts, message boards and user reviews.
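To make the first of these tools more concrete, here is a minimal Python sketch of hash matching. It is an illustration under stated assumptions, not how any particular platform works: the names `KNOWN_BAD_HASHES`, `is_known_bad` and `triage` are hypothetical, and the blocklist entry is made-up sample data. The sketch uses an exact SHA-256 match, which only catches byte-for-byte copies of a file that was already flagged. Production systems typically rely on perceptual hashes that tolerate resizing or re-encoding.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical blocklist: digests of files already confirmed as violating.
# The sample bytes below are placeholder data for illustration only.
KNOWN_BAD_HASHES = {sha256_hex(b"previously flagged file bytes")}


def is_known_bad(upload: bytes) -> bool:
    """True if the upload is byte-for-byte identical to a flagged file."""
    return sha256_hex(upload) in KNOWN_BAD_HASHES


def triage(upload: bytes) -> str:
    """Block exact matches automatically; everything else goes to a human."""
    return "block" if is_known_bad(upload) else "send_to_review"
```

In a setup like this, the automated check only handles content that has been seen before, so new or altered uploads still reach a human moderator for review.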

What Is The Content Moderation Process?

The process of content moderation involves screening and monitoring online user-generated content.

Platforms must moderate content to ensure it complies with established guidelines of acceptable behaviour that pertain specifically to the forum and its user base.

How Much Does Content Moderation Cost?

It depends on the business scale, type of moderation and the specific guidelines you may have. To fully understand the unique needs of your business and online platforms, feel free to contact us to learn more about Leap Steam content moderation services.

As a bonus, we provide a complimentary consultation and trial for our services!
