Wondering what content moderation is? Curious how it could be applied to your business?

Content moderation is the practice of monitoring user-generated posts and applying a predetermined set of rules and guidelines to determine whether each post is acceptable.

The work of content moderators has been described negatively in the past or overlooked in most cases, as many businesses did not know about its use cases. Moderating inappropriate online content is an important task that needs to be done to protect innocent users from horrible content.

Leap Steam relies on AI technology to deploy the first moderation cycle to ensure fast and accurate moderation. Human intervention will always be necessary to judge the “grey areas” that AI cannot capture and that require the human touch.

While content moderation is not a one-size-fits-all solution, what follows are some essential and important questions every business needs to ask itself about this topic before looking for a vendor for content moderation.

In this article, we will cover the top 10 things frequently asked about content moderation and how Leap Steam can cater for your business needs. Let’s hop right into it!

Table Of Contents

1. The Need For Content Moderation

2. What Is Content Moderation

3. The Role Of Content Moderators

4. Definition & Types Of Content Moderation

4.1 Pre-Content Moderation

4.2 Post-Content Moderation

4.3 Reactive Content Moderation

5. Why Content Moderation Is Important For Your Business

6. “Human moderation” VS “AI moderation” (Which Is Better)

7. Can Content Moderation Companies Help Improve Customer Relations

7.1 Sentiment Analysis

7.2 Social Listening

8. What Types Of Content Can Be Moderated

8.1 Text

8.2 Images

8.3 Videos

9. Why Should I Outsource My Content Moderation Needs?

10. Advantages Of Working With Leap Steam (Outsource To A Vendor)

1. The Need For Content Moderation

A survey of US digital ad buyers found that traditional digital marketing techniques, such as pop-ups, banners, autoplay videos, and other formats, are annoying to consumers. More people than ever are using ad blockers. Marketers today are faced with the challenge of finding new ways to reach their customers.

According to Forbes Magazine, “Content is King”. They say that “media has become democratized” and that marketing now “centres around the customers rather than itself. It attracts people rather than interrupts them…”.

More than 8 in 10 consumers say “user-generated content”, in the form of discussions or reviews & recommendations from people they don’t know has a major influence on their purchasing habits. User-generated content (or UGC) used in a marketing context has been known to help brands in numerous ways. UGC encourages more engagement with its users and increases the likelihood that the content will be shared by a factor of two. It also builds trust with consumers as a majority of consumers trust content created by other users over branded marketing.

UGC can allow for better brand-consumer relationships with 76% of consumers trusting online reviews as much as recommendations from their own family and friends. UGC boosts SEO rankings for brands. This leads to more traffic being driven to a brand’s website and more content is therefore linked back to the website. It can lead to an increase in sales too, as 63% of customers are more likely to purchase from a site that has user reviews.

All of this is particularly true of Millennials. Millennials have recently overtaken Baby Boomers as the generation with the greatest combined purchasing power in history, with a collective spending power of more than $1.4 trillion per year. In short, user-generated content drives customers to your site, encourages engagement with your brand, helps to position your site on the front page of search engines, develops better brand-consumer relationships with a vitally important demographic and can increase company sales and revenue.

BUT! The biggest problem is that the content users provide may not always be what the brand would like to be associated with. Every minute of every day, thousands of pieces of content are posted online, which makes consistent monitoring extremely hard. This is why content moderation is needed to prevent such incidents from happening.

The content moderator’s job ranges from making sure that platforms are free of spam, content is placed in the right category, and users are protected from scammers to reviewing and analyzing reports of abusive or illegal behaviour and content. They decide, based on a predetermined set of rules and guidelines, as well as the law, whether the content should stay up or come down.

2. What Is Content Moderation

Content moderation simply refers to the practice of analyzing user-generated submissions, such as reviews, videos, social media posts, or forum discussions. Based on predefined criteria, content moderators will then decide whether a particular submission can be used or not on that platform.

In other words, when content is submitted by a user to a website, that piece of content will go through a screening process (the moderation process) to make sure that the content adheres to the regulations of the website. Unacceptable content is therefore removed based on its inappropriateness, legal status, or its potential to offend.

Content moderation is a common service utilised by many businesses with online platforms that rely on user-generated content (forums, online communities, etc.) or allow user-generated content to boost interaction or sales (reviews, pictures, etc.).

There are also different types of content moderation, such as pre-moderation, post-moderation, and reactive moderation, which will be covered later in this article.

3. The Role Of Content Moderators

Now that we have explained what content moderation is, you might be wondering who does the job. This role is usually performed by content moderators.

Content moderators filter posts, comments, reviews, or live chats by applying a predetermined set of rules and guidelines. They protect your reputation by eliminating content that may expose your brand to security risks or legal issues.

Protecting your brand and your users from such content is paramount. However, the costs of running and maintaining an in-house moderation department can hold back a company’s ability to grow. Outsourcing your content moderation needs to a company like Leap Steam provides a sustainable solution.

Content moderation can be done by human moderators or by automated tools, so there is not always a person behind each moderation decision. Whichever method is used, the role stays the same: to filter content based on a set of predetermined rules and circumstances!

4. Definition & Types Of Content Moderation

There are several different types of content moderation but they usually fall under the umbrella of one of these three choices:

4.1 Pre-Content Moderation

With this type of moderation, a screening process takes place after users submit their content but before it is published. If the content passes the platform’s criteria, it is allowed to be made public. This method allows the final, publicly viewed version of the website to be completely free from anything that could be considered undesirable.

The downside of pre-moderation is the fact that it delays user-generated content from going public. This can leave your users feeling frustrated and unsatisfied. Another disadvantage is the high cost. Maintaining a team of moderators tasked with ensuring top-quality public content can be expensive. Quick and easy scalability can be another issue. If the number of user submissions increases suddenly, the workload of the moderators also increases. This could lead to significant delays in content going public.

This method of moderation is best suited to an online platform where the quality of the content cannot be compromised under any circumstances.

4.2 Post-Content Moderation

This moderation technique means the content is displayed on the platform immediately after it is created. This is extremely useful when a quicker pace of public content generation is desired. The content is still screened by a content moderator, after which it is either deemed suitable and allowed to remain or considered unsuitable and removed.

This method has the advantage of promoting real-time content and active conversations.

The disadvantages of this method include legal obligations and the difficulty moderators face in keeping up with everything users upload. The more views a piece of content receives before it is reviewed, the greater its impact on the platform, so content that strays from the platform’s guidelines can prove costly.

Therefore, the moderation and review process needs to be completed as quickly as possible.
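The difference between pre-moderation and post-moderation comes down to whether content is visible before or after review. The following is a minimal Python sketch of that timing, assuming a hypothetical `Submission` record and a placeholder `passes_guidelines` rule; neither is taken from any real moderation product.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    text: str
    visible: bool = False   # whether the public can currently see it
    reviewed: bool = False  # whether a moderator has screened it


def passes_guidelines(text: str) -> bool:
    # Placeholder rule for illustration only; real guidelines are far richer.
    return "spam" not in text.lower()


def submit(text: str, mode: str) -> Submission:
    """Pre-moderation hides new content until review; post-moderation shows it at once."""
    if mode not in ("pre", "post"):
        raise ValueError("mode must be 'pre' or 'post'")
    return Submission(text=text, visible=(mode == "post"))


def review(item: Submission) -> Submission:
    """In both modes, the moderator's decision sets the final visibility."""
    item.reviewed = True
    item.visible = passes_guidelines(item.text)
    return item


pre = submit("Great product!", mode="pre")
post = submit("Buy cheap SPAM here", mode="post")
print(pre.visible, post.visible)  # visibility before review
review(pre)
review(post)
print(pre.visible, post.visible)  # visibility after review
```

In this sketch the unwanted post is public until `review` runs, which is why post-moderation depends on a short review time.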

4.3 Reactive Content Moderation

With reactive moderation, users have the responsibility to flag and react to the content which is displayed to them in real-time. If the members deem the content to be offensive or undesirable, they can react accordingly and report it if needed. A report button is usually situated next to any public piece of content and users can use this option to flag anything which falls outside the community’s guidelines.

This system is extremely effective when used in conjunction with a pre-moderation or a post-moderation setup. It allows the platform to identify inappropriate content that might have slipped by the community moderators and it can greatly reduce their workload.

However, this style of moderation may not make sense if the quality of the content is paramount to the reputation and status of the platform.
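The report-button mechanism can be sketched in a few lines of Python. This is only an illustration: the threshold of three reports, the `report` function, and the in-memory queue are assumptions, and a real platform would choose its own values and storage.

```python
from collections import defaultdict

REPORT_THRESHOLD = 3  # assumed value; each platform would choose its own

# post_id -> set of user ids who reported it (a set ignores repeat reports)
reports = defaultdict(set)
review_queue = []


def report(post_id: str, user_id: str) -> bool:
    """Record a user report; return True if this report queued the post for review."""
    reports[post_id].add(user_id)
    if len(reports[post_id]) >= REPORT_THRESHOLD and post_id not in review_queue:
        review_queue.append(post_id)
        return True
    return False


# "bob" reports twice, but only distinct reporters count towards the threshold.
for user in ["ann", "bob", "bob", "cy"]:
    report("post-42", user)
print(review_queue)
```

Counting distinct reporters rather than raw clicks is one simple way to stop a single user from forcing a post into review.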

Content is king, but it must be monitored to protect online users. While there are different types of content moderation available, the right choice boils down to your business needs, the types of user-generated content you host and how wide or specific your user base is. It is worth working out which types of content moderation best fit your needs and will help maintain your brand reputation & online community!

To learn more, schedule a complimentary call with us today!

5. Why Content Moderation Is Important For Your Business

Social media and other digital platforms are growing exponentially with new content. Consumers and companies alike are uploading content at staggering rates. 300 hours of video are uploaded to YouTube every minute and 300 million photos are uploaded to Facebook every day!

Because of the sheer amount of content generated every second, inappropriate, harmful, or illegal content or behaviour hosted on a company’s platform can be a serious problem for the reputation of a brand. Considering the young age of many internet users, the need for constant and vigilant moderation is apparent.

Image moderation, video moderation, and text moderation are crucial to ensure only appropriate, user-generated content is posted, and the online experience of your users is free from anything objectionable.

Online interactive communities, such as message boards, forums, streaming sites, chat rooms, or image hosting services, live or die based on the content provided by their users. The need to swiftly identify and deal with inappropriate material amongst this user-generated content is critical to a business’s development and maintaining a pleasant online community and reputation.

6. “Human moderation” VS “AI moderation” (Which Is Better)

Human moderation, or manual moderation, is when humans manually conduct the tasks of image moderation, video moderation, and text moderation. The human moderator follows rules and guidelines specific to the site or app. They protect online users by keeping content that could be considered illegal, a scam, inappropriate, or harassment, off the platform.

Automated moderation means that any user-generated content submitted to an online platform will be automatically accepted, refused, or sent to human moderation, based on the platform’s specific rules and guidelines. Automated moderation is the ideal solution for online platforms that want to make sure that high-quality user-generated content goes live instantly and that users are safe when interacting on their site.
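The three-way outcome described above (accept, refuse, or send to a human) can be sketched as a simple routing function. This Python sketch assumes a hypothetical `risk_score` between 0 and 1 produced by some upstream classifier, and the two thresholds are illustrative values rather than settings from any real system.

```python
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    REFUSE = "refuse"
    HUMAN_REVIEW = "human_review"


# Assumed thresholds for illustration; a real platform would tune these.
ACCEPT_BELOW = 0.2
REFUSE_ABOVE = 0.9


def route(risk_score: float) -> Decision:
    """Map a risk score in [0, 1] to accept, refuse, or escalation to a human."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < ACCEPT_BELOW:
        return Decision.ACCEPT
    if risk_score > REFUSE_ABOVE:
        return Decision.REFUSE
    return Decision.HUMAN_REVIEW  # the ambiguous middle band


for score in (0.05, 0.5, 0.97):
    print(score, route(score).value)
```

Widening the middle band sends more content to human moderators; narrowing it automates more decisions at the cost of more mistakes on ambiguous content.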

While artificial intelligence (AI) has come a long way over the years and companies continuously work on their AI algorithms, the truth is that human moderators are still essential for managing your brand online and ensuring your content is up to the required standard.

Humans are still the best when it comes to reading, understanding, interpreting, and moderating content. Context and subjectivity matter a great deal.

Because of this, great businesses will make use of both AI and humans when creating an online presence and moderating content online. Leap Steam follows this “belt and braces” approach to moderation.

Leap Steam’s automated moderation analyzes content almost instantaneously and, based on predefined criteria, decides to approve it, refuse it or, in the case of ambiguous or contextual content, send it to the experts in the manual moderation team for final judgment.

Leap Steam’s content moderation relies on AI technology to deploy the first round of moderation to ensure fast results! A human moderator will always be necessary to judge the “grey areas” that require a human touch.

Combining simple integration with our moderation tools and the expertise of our workforce gives clients a double safety net.

7. Can Content Moderation Help Improve Customer Relations

The short answer here is “yes”. Leap Steam can enable its clients to gain a deeper understanding of how consumers feel about their brand. This is done in two main ways:

7.1 Sentiment Analysis

Sentiment analysis, also known as ‘opinion mining’, can be a powerful tool to generate useful information about the conversation surrounding your company and products. By analyzing comments, reviews, and feedback, Leap Steam can quantify customers’ feelings into accurate insights, thus producing a reliable measurement of your community responses that can be used to improve your marketing strategy.

Sentiment analysis helps to gauge public opinion, conduct nuanced market research, monitor brand and product reputation, and understand customer experiences better.
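To make the idea of turning comments into a measurement concrete, here is a deliberately tiny word-counting sketch in Python. The word lists and function names are assumptions made for illustration; real sentiment analysis typically uses trained models or much larger lexicons, and this is not a description of Leap Steam's tooling.

```python
import re
from typing import Dict, List

# Tiny illustrative word lists; real systems use far larger resources.
POSITIVE = {"great", "love", "excellent", "helpful", "fast"}
NEGATIVE = {"bad", "slow", "terrible", "broken", "rude"}


def sentiment_score(comment: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", comment.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def summarize(comments: List[str]) -> Dict[str, int]:
    """Count how many comments lean positive, negative, or neutral."""
    summary = {"positive": 0, "negative": 0, "neutral": 0}
    for comment in comments:
        score = sentiment_score(comment)
        label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        summary[label] += 1
    return summary


reviews = [
    "Great service, fast delivery!",
    "Terrible support and a broken app.",
    "It arrived on Tuesday.",
]
print(summarize(reviews))
```

A word count like this misses sarcasm and context, which is one reason such numbers are best read alongside human judgment.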

7.2 Social Listening

Social listening allows brands to track, analyze, and respond to conversations about them on social media. It’s a crucial modern component of audience research. It’s a reliable source to learn more about your customers’ interests, behaviours, experiences, and how they respond to your company’s message.

Leap Steam’s team monitors social media channels to observe customer interactions, mentions, and keywords to develop useful insights from community interactions to strengthen the connection with a brand’s target demographics.

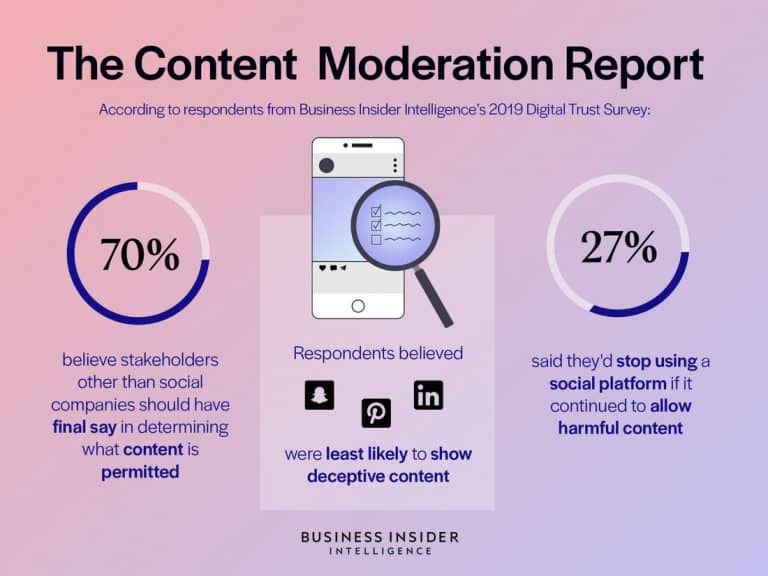

Content Moderation Report of Business Insider

Social media platforms would lose 27% of users if the content is not moderated!

Content moderation helps to maintain your brand reputation, generate customer loyalty and most importantly it helps to keep user-generated content on your online platforms trustworthy.

Being trustworthy and having good quality content is important to your customer base and potential prospects. It also matters in the eyes of Google, so it helps to improve your visibility online.

8. What Types Of Content Can Be Moderated

Moderation can be used for any kind of content, depending on the platform’s focus. For example, moderation can be used for text, images, videos, and live streaming.

8.1 Text

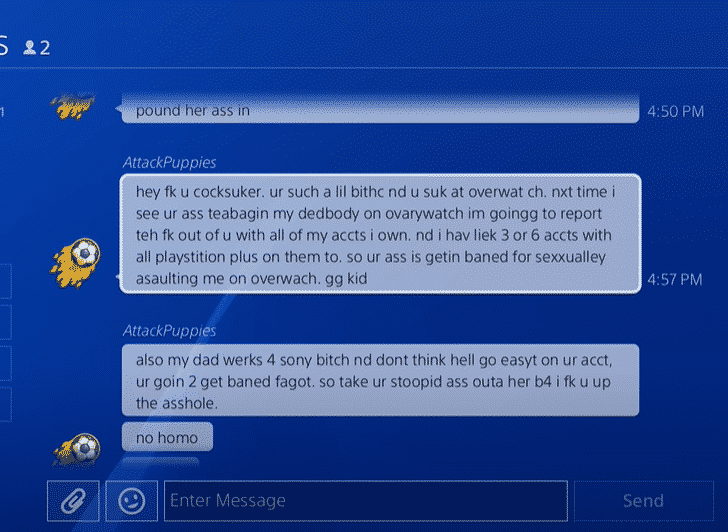

Text posts are everywhere, and they can accompany any type of visual content. That’s one reason why moderating text is a key use case on any platform with user-generated content. Moderating text manually on online platforms can prove to be quite a challenge.

Finding offensive keywords is often not sufficient, because inappropriate text can be composed of a sequence of perfectly acceptable words and so avoid detection easily.
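A short Python sketch shows why keyword matching alone falls short. The blocklist here is an assumed two-word example, not a real policy list.

```python
import re

BLOCKLIST = {"idiot", "scam"}  # assumed example terms, not a real policy list


def contains_blocked_word(text: str) -> bool:
    """Return True if any whole word in the text is on the blocklist."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


# A message with a listed keyword is caught.
print(contains_blocked_word("This is a scam"))
# A hurtful message built only from ordinary words is not.
print(contains_blocked_word("Nobody would miss you if you were gone"))
```

The second message contains no listed word, so the filter lets it through even though a human reader would recognise it as harmful.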

8.2 Images

Moderating visual content can be easier than moderating text content. However, having clear guidelines and thresholds for moderation is essential.

Cultural sensitivities and cultural differences may also come into play, so it’ll be important to understand the specifics of your target markets in different geographies. It can also be challenging to review large amounts of images.

8.3 Videos

Videos have become one of the most common forms of content today. Moderating the video and the comments posted under the video is no easy feat, but it is necessary. The whole video also needs to be screened before it can be approved for release. Moderating video content is also challenging because it often has multiple text types, such as captions and titles.

Think of the variety of content published every day, from news articles to blog posts to tweets to Facebook posts to Instagram stories, etc.

With Leap Steam, all of these can be moderated correctly: user-generated content will be reviewed, flagged and removed if it is deemed to have exceeded the thresholds for moderation, or handled according to your requirements.

9. Why Should I Outsource My Content Moderation Needs?

Outsourcing content moderation services is becoming an increasingly popular option among website owners with rapidly growing online communities. Allowing an expert team to handle this technical aspect of community management can save a huge amount of time and resources.

As Leap Steam has been conducting image moderation, video moderation, and text moderation for over a decade, its partners know they will have access to a workforce with extensive training and expertise.

10. Advantages Of Working With Leap Steam (Outsource To A Vendor)

- Leap Steam’s team will report and eliminate content that may be considered upsetting, offensive, or even illegal.

- Significantly reduce exposure to legal issues associated with security risks, trademark violations, and PR scandals related to scams, threats, and other illegal activity.

- Identify and delete content considered to be trolling, spamming, flaming, or hate speech.

- Protect your underage or vulnerable users from viewing inappropriate content.

- Monitor for and identify predatory behavior such as grooming.

- Diversity is one of Leap Steam’s core strengths, ensuring native speakers provide an understanding of local cultures.

- Make data-driven decisions about new services, products, or marketing campaigns based on comments posted about your site or brand.

- Utilize experienced and highly trained personnel who specialize in content moderation, allowing you to focus your talent and resources on growing your business.

- Content moderation teams that operate 24 hours a day, 7 days a week.

- Scalable coverage offers a cost-effective way to deal with times of high or low volume of traffic.

- Integrate with our moderation tool via our API, or let us adapt to your system to ensure smooth collaboration.

- Connect with your target demographics and develop marketing strategies based on accurate and insightful data.

Conclusion

While the topic of content moderation comes with advantages and disadvantages, it makes complete sense for companies with digital platforms to invest in this. If the content moderation process is implemented in a scalable manner, it can allow the platform to become the source of a large volume of user-generated content.

Not only will this source of information be regarded as valuable by other users, but it can help build trust between brand and consumer. The content supplied can also be of great value to those companies who wish to know more about the sentiment around their brand.

Content moderation will allow the platform to enjoy the opportunity to publish a great deal of content, while also ensuring the protection of its users from malicious and undesirable content.

To learn more about outsourcing your content moderation processes and the value that it can bring your business, contact Leap Steam for a free consultation and trial.

Frequently Asked Questions About Content Moderation

What Is Content Moderation In BPO?

It refers to outsourcing services pertaining to content moderation. This is common for business owners with large online communities or multiple online platforms whereby user-generated content is published daily.

Can I Do Content Moderating By Myself?

Content moderation is not an easy job, and there is a lot at stake in the decisions a content moderation team makes. You need to be able to sift through the worst of the internet and judge whether each piece of content should stay up.

Hence, having prior experience in monitoring social media platforms and online communities is essential – which is why you should trust Leap Steam if you are looking into outsourcing for Content Moderation.

How Is Content Moderation Done?

Content moderation refers to reviewing and removing inappropriate content that users post online. The process entails applying pre-set rules for tracking content. If it doesn’t meet the guidelines, the content is flagged and removed.

Why Should We Engage Leap Steam For Content Moderation?

By engaging Leap Steam, you gain access to our content moderation solution and to our in-house team of human moderators, who will review your content. This means we will take care of the heavy lifting for you!

We can also integrate it into your existing moderation tool through our API, and we’ll take care of the rest for you. We can adapt our system to suit your needs so that we can collaborate smoothly. We provide the best solution for your specific needs, so let’s get in touch today!

Do not hesitate to Email us or Chat with us (24/7)

Leap Steam
